Style Transfer Learning

Brad Magnetta

September 23, 2019

But is that art?

Art is a tangible thing with an intangible definition. There are no certain or definite guidelines for what art really is. Many choose to define art as “anything someone perceives as art,” while others attempt something more specific: “the expression or application of human creative skill and imagination, typically in a visual form such as painting or sculpture, producing works to be appreciated primarily for their beauty or emotional power.”

There couldn’t be a larger contrast with mathematics, where proofs and definitions are foundational. This is why style transfer learning is so interesting. It is a mathematical optimization problem that provides a definite mapping from a visual subject to a new piece of art rendered in the style of an existing work of visual art.

It is easy to argue that this optimization problem still produces art under my first definition, but the claim holds even if we must use the second. Humans designed this optimization problem (1), the algorithms used to solve it (2), and the computers that run it. The problem and our ability to solve it are most definitely examples of human skill. Furthermore, our creativity arises from the choice of subject and style. Creations made this way are well documented for evoking emotional power and beauty, but if you keep reading you can be the judge. I find it helpful to think of the optimization problem as an instrument, like a brush.

Technical details

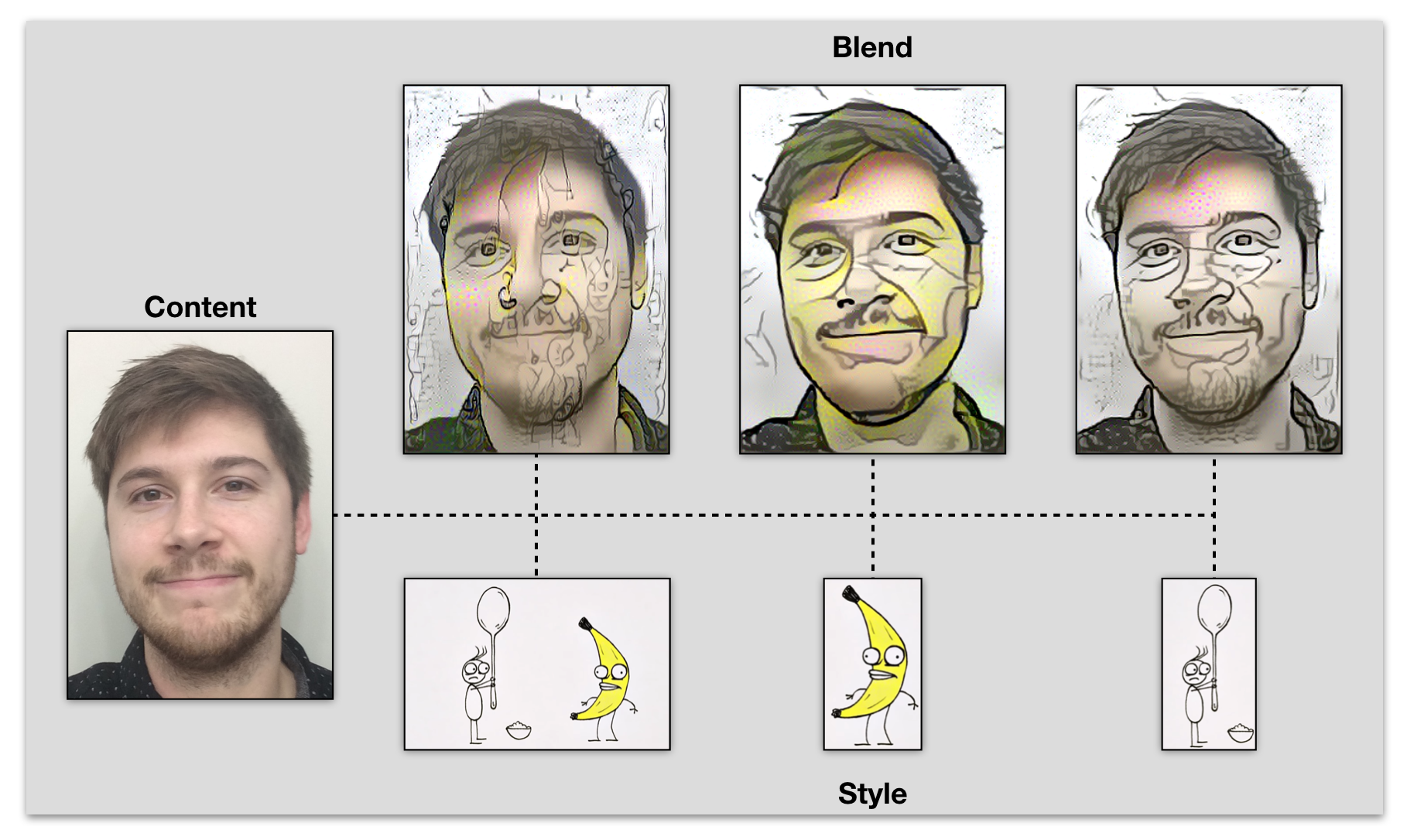

Convolutional neural networks (CNNs) are a class of deep neural networks. Reference (3) is a great practical introduction to CNNs that reworks ideas from (4). The key observation behind style transfer learning was that CNNs pre-trained for object recognition can be used to disentangle the content and style representations of an image. At each layer, a CNN convolves its input, either the image or the previous layer’s feature maps, with a set of “filters” to produce new feature maps. A filter is nothing more than a matrix, and training uses backpropagation to adjust the entries of the filter matrices (5). Style transfer uses specific trained filters from a CNN to produce a feature map of an image that represents either its content or its style.

Once we have disentangled this information, an optimization problem is used to blend the content of one image with the style of another. (1) defines an optimization problem involving two terms, one for the content representation and one for the style representation, though a third term that encourages continuity is often added. Because the objective is non-convex, it is solved numerically with L-BFGS. The Keras documentation has a great practical implementation of style transfer learning (6).

Below we demonstrate results from using this algorithm. The filters from the pre-trained VGG19 that best capture the content and style representations vary from image to image. In our experience, we can scale up the size of our generated images by first converging at a low pixel resolution and then using that result as the starting point for a higher-resolution run.
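To make the terms of the objective more concrete, here is a minimal NumPy sketch of how they are commonly written. It is an illustration under stated assumptions, not the exact code behind our results: the function names, the normalization constants, the default weights, and the choice of a total variation penalty for the optional third term are all ours, and the feature maps are assumed to be arrays of shape (height, width, channels) that have already been extracted from a pre-trained network.

```python
import numpy as np


def gram_matrix(features):
    """Channel-by-channel correlations of a (height, width, channels) feature map."""
    h, w, c = features.shape
    flat = features.reshape(h * w, c)  # one row per spatial position
    return flat.T @ flat               # shape (channels, channels)


def content_loss(content_feats, generated_feats):
    """Squared difference between feature maps, position by position."""
    return float(np.sum((generated_feats - content_feats) ** 2))


def style_loss(style_feats, generated_feats):
    """Squared difference between Gram matrices (assumed normalization)."""
    h, w, c = style_feats.shape
    diff = gram_matrix(style_feats) - gram_matrix(generated_feats)
    return float(np.sum(diff ** 2) / (4.0 * c ** 2 * (h * w) ** 2))


def total_variation_loss(image):
    """Penalize differences between neighboring pixels (assumed third term)."""
    dy = image[1:, :-1, :] - image[:-1, :-1, :]  # vertical neighbors
    dx = image[:-1, 1:, :] - image[:-1, :-1, :]  # horizontal neighbors
    return float(np.sum((dx ** 2 + dy ** 2) ** 1.25))


def total_loss(content_feats, style_feats, generated_feats, generated_image,
               content_weight=1.0, style_weight=1.0, tv_weight=1.0):
    """Weighted sum of the three terms; the default weights are placeholders."""
    return (content_weight * content_loss(content_feats, generated_feats)
            + style_weight * style_loss(style_feats, generated_feats)
            + tv_weight * total_variation_loss(generated_image))
```

The Gram matrix is what separates style from content in this sketch: it sums over spatial positions, so shuffling the positions of a feature map leaves the style loss essentially at zero while the content loss becomes positive. In practice the generated image's features would be recomputed by the network at every step, and an optimizer such as L-BFGS would minimize `total_loss` with respect to the pixels.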

Let there be art